As a professor by day, I spend a lot of time doing research and studying financial data. Recently, my research assistant and I have been digging deeper into the Magic Formula. We simply can’t figure out why the results reported in The Little Book That Beats the Market are so much stronger than what we find.

Granted, there are likely to be differences at the margin, but any robust strategy should be fairly impervious to slight changes in technique, time period, and method.

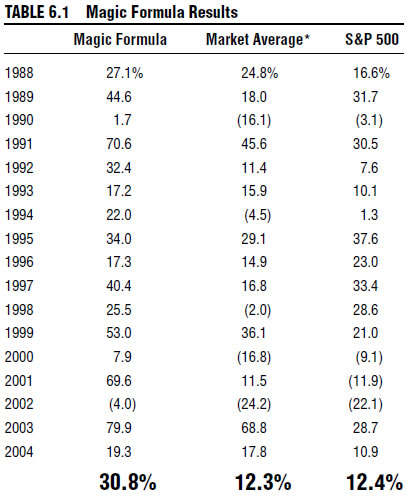

Here are the returns presented in the Little Book that Beats the Market:

The results are hypothetical results and are NOT an indicator of future results and do NOT represent returns that any investor actually attained. Indexes are unmanaged, do not reflect management or trading fees, and one cannot invest directly in an index. Additional information regarding the construction of these results is available upon request.

A 30.8% CAGR is breathtaking and would make you a multi-billionaire very quickly. Nonetheless, we can’t replicate these results under any of the methods we have tried.
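The reason 30.8% is so striking is compounding. The short sketch below grows a hypothetical $10,000 at the book's 30.75% CAGR and at the 13.80% CAGR from our large-cap back test reported below. The starting amount and the 20-year horizon are arbitrary assumptions chosen for illustration; they are not the book's test period.

```python
def terminal_wealth(start: float, cagr: float, years: int) -> float:
    """Grow `start` dollars at a constant annual rate `cagr` for `years` years."""
    return start * (1.0 + cagr) ** years


# Hypothetical inputs: $10,000 held for 20 years (both values are assumptions).
START, YEARS = 10_000, 20
book = terminal_wealth(START, 0.3075, YEARS)        # CAGR reported in the book
replicated = terminal_wealth(START, 0.1380, YEARS)  # CAGR from our large-cap back test

print(f"At the book's CAGR:   ${book:,.0f}")
print(f"At our back-test CAGR: ${replicated:,.0f}")
print(f"Ratio: {book / replicated:.1f}x")
```

A gap of roughly 17 percentage points per year turns into an order-of-magnitude difference in ending wealth, which is why the discrepancy matters.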

We’ve hacked and slashed the data and dealt with survivorship bias, look-ahead bias (by using point-in-time data), erroneous data, and all the other issues addressed by the standard techniques of academic empirical asset pricing. Still no dice.

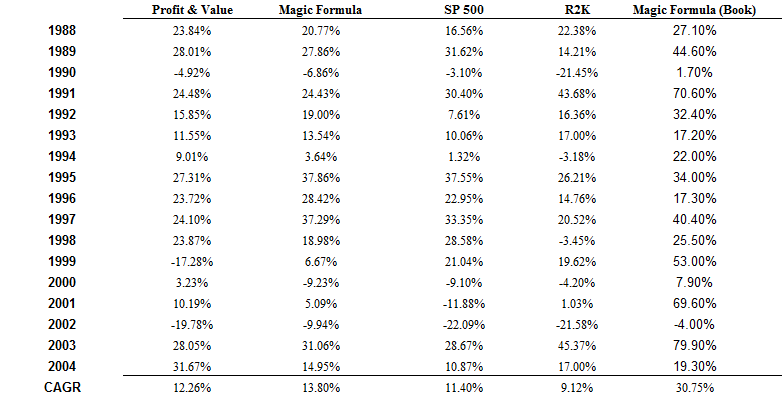

In the preliminary results presented below, we analyze a stock universe consisting of large-caps (defined as being larger than 80 percentile on the NYSE in a given year). We test a portfolio that is annually re-balanced on June 30th, equal-weight invested across 30 stocks on July 1st, and held until June 30th of the following year.


Our results differ sharply from the published Magic Formula results (30.75% CAGR in the book vs. 13.80% CAGR in our back test). As a robustness check, we also analyze the performance of the Profit & Value strategy, which is the “academic equivalent” of the Magic Formula strategy. Both the Magic Formula and the Profit & Value strategy outperform the market, but neither comes close to DOUBLING market returns.
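For readers who want to experiment, here is a minimal sketch of the Magic Formula selection step as the book describes it: rank stocks by earnings yield (EBIT / enterprise value) and by return on capital (EBIT / tangible capital), add the two ranks, and buy the best combined ranks in equal weights. The `Firm` fields and the `magic_formula_portfolio` helper are hypothetical names; this is not the exact code behind our tables. It also omits the book's additional exclusions (e.g., utilities and financials), the size filter, and the rebalancing calendar. The same combined-rank idea could be applied to other signal pairs, such as those behind Profit & Value, but only the Magic Formula is implemented here.

```python
from dataclasses import dataclass


@dataclass
class Firm:
    ticker: str
    ebit: float              # operating earnings (EBIT)
    enterprise_value: float  # roughly: market cap + debt - cash
    tangible_capital: float  # net working capital + net fixed assets


def rank(values):
    """Rank each value: 0 = highest. Ties keep their input order (stable sort)."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0] * len(values)
    for r, i in enumerate(order):
        ranks[i] = r
    return ranks


def magic_formula_portfolio(firms, n=30):
    """Return {ticker: weight} for the n best combined-rank firms, equal-weighted."""
    # Ratios are only meaningful with positive denominators.
    eligible = [f for f in firms if f.enterprise_value > 0 and f.tangible_capital > 0]
    ey = rank([f.ebit / f.enterprise_value for f in eligible])
    roc = rank([f.ebit / f.tangible_capital for f in eligible])
    # Lowest combined rank wins; ticker breaks ties deterministically.
    order = sorted(range(len(eligible)), key=lambda i: (ey[i] + roc[i], eligible[i].ticker))
    picks = [eligible[i].ticker for i in order[:n]]
    weight = 1.0 / len(picks) if picks else 0.0
    return {t: weight for t in picks}
```

Small implementation choices like the tie-break rule, which firms are eligible, or how enterprise value is measured are exactly the kind of details that can move back-test results.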

Perhaps this is a size effect?

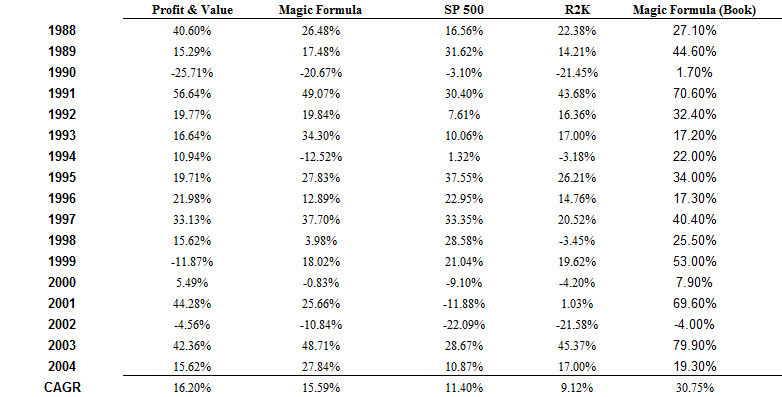

As an additional check, we looked at the results when the universe consists of small, mid, and large caps (defined as being larger than 20 percentile on the NYSE in a given year):


Same story here: definitely some serious outperformance from both formulas, but nowhere near the 31% CAGR outlined in the book.
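The only change between the two sets of results is the size cutoff. A minimal sketch of how an NYSE breakpoint is commonly applied: compute the percentile from NYSE-listed stocks only, then keep every stock in the universe, whatever its exchange, whose market cap exceeds it. The `(ticker, market_cap, exchange)` tuple layout and the linear-interpolation percentile are assumptions for illustration, not our actual data pipeline.

```python
def nyse_breakpoint(nyse_caps, pct):
    """Linearly interpolated pct-th percentile (0-100) of NYSE market caps."""
    xs = sorted(nyse_caps)
    if not xs:
        raise ValueError("need at least one NYSE market cap")
    k = (len(xs) - 1) * pct / 100.0
    lo = int(k)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (k - lo)


def size_universe(stocks, pct):
    """Tickers (any exchange) whose market cap exceeds the NYSE pct-th percentile."""
    nyse_caps = [cap for _, cap, exchange in stocks if exchange == "NYSE"]
    cutoff = nyse_breakpoint(nyse_caps, pct)
    return [ticker for ticker, cap, _ in stocks if cap > cutoff]
```

Calling `size_universe(stocks, 80)` gives the large-cap universe and `size_universe(stocks, 20)` the broader one; because NASDAQ stocks tend to be smaller, the lower cutoff admits many more of them.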

So what gives?

There are several possible conclusions we can draw from this analysis:

- We screwed something up in our analysis.

- Greenblatt & Co. screwed something up in their analysis.

- The strategy is highly unstable (i.e., small changes to the back test procedure have large effects).

I am fairly confident that we did a careful job in our analysis, and I am confident that Greenblatt & Co. did a solid analytical job. My guess is that the Magic Formula back test performance is simply highly unstable, and that small changes in assumptions or analysis can have dramatic effects on performance.
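One way to check the instability explanation is to rerun the same strategy across a grid of reasonable assumptions and look at the spread of outcomes. The sketch below assumes a hypothetical `backtest(size_pct, rebalance_month)` function that returns a list of annual returns. The grid values are arbitrary, and `toy_backtest` returns placeholder numbers, not actual results.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple


def cagr(annual_returns: Sequence[float]) -> float:
    """Compound annual growth rate implied by a sequence of simple annual returns."""
    growth = 1.0
    for r in annual_returns:
        growth *= 1.0 + r
    return growth ** (1.0 / len(annual_returns)) - 1.0


def sensitivity(
    backtest: Callable[[int, int], List[float]],
    size_pcts: Sequence[int] = (20, 50, 80),      # NYSE size-percentile cutoffs
    rebalance_months: Sequence[int] = (3, 6, 12),  # month of the annual rebalance
) -> Dict[Tuple[int, int], float]:
    """Rerun one strategy under every (size cutoff, rebalance month) pair."""
    return {
        (pct, month): cagr(backtest(pct, month))
        for pct, month in product(size_pcts, rebalance_months)
    }


# Hypothetical stand-in for a real back test: placeholder returns, NOT actual results.
def toy_backtest(size_pct: int, rebalance_month: int) -> List[float]:
    return [0.10 + (80 - size_pct) / 1000.0] * 5


results = sensitivity(toy_backtest)
spread = max(results.values()) - min(results.values())
print(f"CAGR spread across assumptions: {spread:.2%}")
```

A robust strategy should show a narrow spread; a wide spread across equally defensible choices is a warning sign.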

Interestingly enough, I visited the Formulainvesting.com website and clicked on the live results of the Magic Formula: http://www.formulainvesting.com/actualperformance_MFT.htm

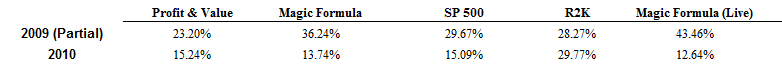

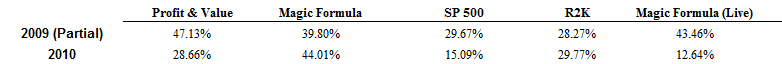

I then compared the live 2009 (partial-year) and 2010 (full-year) results against our back-tested 2009 (partial-year) and 2010 results, using an 80th-percentile NYSE size cutoff:


From May 1 to December 31, 2009, our back test of the Magic Formula lagged its live performance. In 2010, our back test shows a 13.74% return, whereas the live Magic Formula earned 12.64% after fees.
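Lining up a back test with live numbers means compounding over the same window. A minimal sketch, assuming monthly returns are available for both series: chain the May through December 2009 months into a single partial-year return, then express the difference in percentage points. The helper names are hypothetical. Note that the live 2010 figure is after fees, so the gap does not compare like with like unless the back test is treated the same way.

```python
from math import prod


def chain(returns):
    """Compound simple periodic returns (e.g. May-Dec monthly) into one holding-period return."""
    return prod(1.0 + r for r in returns) - 1.0


def gap_pp(backtest_return, live_return):
    """Back-tested minus live return, in percentage points."""
    return (backtest_return - live_return) * 100.0


# 2010 full-year figures quoted above: back test 13.74%, live 12.64% after fees.
print(f"2010 gap: {gap_pp(0.1374, 0.1264):.2f} pp")
```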

But here are the results when we extend the universe down to the 20th-percentile NYSE market-cap cutoff:


2009 absolutely kills it, as does 2010: it is obvious that ‘magic small caps’ are driving the back-tested performance here. The live Magic Formula dramatically underperforms this back test (likely because the live portfolios had limited small- and mid-cap exposure).

Although this evidence is anecdotal, a very limited out-of-sample test (2009 and 2010) suggests that back-test returns to a Magic Formula strategy are VERY unstable and should be analyzed with a skeptical eye.

I’m a huge believer that there is slack in asset prices and that the market is never perfectly efficient; however, I also believe that markets are highly competitive and that prices tend to stay reasonably close to efficient. Applying this view to the Magic Formula results, I would conclude that the strategy probably works at the margin, but expecting massive outperformance, after controlling for risk, is foolhardy.

About the Author: Wesley Gray, PhD

—

Important Disclosures

For informational and educational purposes only and should not be construed as specific investment, accounting, legal, or tax advice. Certain information is deemed to be reliable, but its accuracy and completeness cannot be guaranteed. Third party information may become outdated or otherwise superseded without notice. Neither the Securities and Exchange Commission (SEC) nor any other federal or state agency has approved, determined the accuracy, or confirmed the adequacy of this article.

The views and opinions expressed herein are those of the author and do not necessarily reflect the views of Alpha Architect, its affiliates or its employees. Our full disclosures are available here. Definitions of common statistics used in our analysis are available here (towards the bottom).
