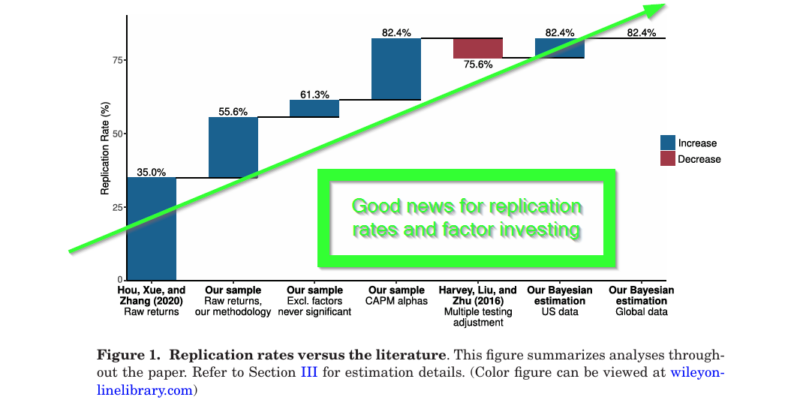

Do we have a chaotic “factor zoo,” as some critics maintain? Is there a replication crisis in factor research? The authors of this paper answer both questions in the negative: they argue that 82% of factors are replicable, the factor zoo is well organized, and the factors are legitimate. Those are bold statements given the 35% replication rate reported by other, more pessimistic studies. So, what’s the key? The authors use Bayesian statistics and find that the proliferation of factors actually reflects valid characteristics of markets and market fluctuations. That’s welcome news for researchers, markets, and investors.

Is There a Replication Crisis in Finance?

- Theis Ingerslev Jensen, Bryan Kelly and Lasse Heje Pedersen

- Journal of Finance

- A version of this paper can be found here


What are the research questions?

- Are risk factors replicable and robust?

- Do factors retain replicability and robustness across global datasets?

- What is data mining and why does it matter?

What are the Academic Insights?

- YES. Overall, the results of financial studies can be replicated using the same methodologies and data. See Figure 1 below, which presents the main results alongside the corresponding published research. The replication rate is the percentage of factors with a statistically significant average excess return. The first bar is the 35% replication rate reported in the factor replication study of Hou, Xue, and Zhang (2020). The second bar shows a 55.6% replication rate in the main sample of U.S. factors analyzed in this new research. The difference is due to the longer sample period, the addition of more conservatively and robustly designed factors, and the inclusion of tests of both raw and risk-adjusted returns. An additional 15 factors that appear in prior literature but were not studied by Hou, Xue, and Zhang (2020) were also included here. Replication rates rise as the methodologies become more powerful, and the Bayesian approach delivers the highest rate, 82.4%. The main results indicate that modern approaches offer a significant leap in replicability.

- YES. Factors hold up when tested on new datasets, over different time periods, and across international markets. This study analyzes a global dataset of 153 factors across 93 countries and demonstrates the robustness of factors outside the U.S. The replication rate remained steady at 82.4% on a global scale. Factors were combined into 13 themes (momentum, value, size, etc.). Eleven of the themes showed replication rates above 50%. Profitability, investment and size showed the weakest performance. Given that the data spanned a diverse set of markets, the concern over datamining or p-hacking, in which researchers, unintentionally or otherwise, find spurious results by testing or “mining” too many hypotheses one after another, is likely overstated.

- A short tutorial on datamining, excerpted from: Smart(er) Investing, Basilico and Johnsen, 2019.

- “Cliff Asness (June 2, 2015) defines datamining as “discovering historical patterns that are driven by random, not real, relationships and assuming they’ll repeat…a huge concern in many fields”. In finance, datamining is especially relevant when investigators are attempting to explain or identify patterns in stock returns. Often, they are attempting to establish a relationship between characteristics of firms with returns, using only US firms in the dataset. For example, a regression is conducted that relates, say, the market value of equity, growth rates or the like, to their respective stock returns. It is important to note that the crux of the datamining issue is that a specific sample of firms observed at a specific time produce the observed results from the regression. The question then arises as to whether or not the results and implications are specific to that period of time only and/or that specific sample of firms only. It is difficult to ensure that the results are not “one-time wonders” within such an in-sample-only design.

- In his Presidential Address for the American Finance Association in 2017, Campbell Harvey takes the issue further into the intentional misuse of statistics. He defined intentional p-hacking as the practice of reporting only significant results when the investigator has conducted any number of correlations on finance variables; or has used a variety of statistical methods such as ordinary regression versus Cluster Analysis versus linear or nonlinear probability approaches; or has manipulated data via transformations or excluded data by eliminating outliers from the data set. There are likely others, but all have the same underlying motivating factor: the desire to be published when finance journals, to a large extent, only publish research with significant results.

- The practices of p-hacking and datamining are at high risk to turn up significant results that are really just random phenomena. By definition, random events don’t repeat themselves in a predictable fashion. “Snooping the data” in this manner goes a long way toward explaining why predictions about investment strategies fail on a going forward basis. Even worse, if they are accompanied by a lack of “theory” that proposes direct hypotheses about investment behavior, the failure to generate alpha in the real world is often a monumental disappointment. In finance, and specifically in the investments area, we therefore describe datamining as the statistical analysis of financial and economic data without a guiding, a priori hypothesis (i.e. no theory). This is an important distinction in that if a sound theoretical basis can be articulated, then the negative aspects of data mining may be mitigated and prospects for successful investing will improve.

- What is a sound theoretical basis? Essentially, sound theory is a story about the investment philosophy that you can believe in. There are likely numerous studies and backtests that have great results that you cannot really trust or believe in. You are unable to elicit any confidence in the investment strategy because it makes no sense. The studies and backtests with results that you can believe in are likely those whose strategies have worked over long periods of time, across a number of various asset classes, across countries, on an out-of-sample basis and have a reasonable story.

- The root of the problem with financial data is that there is essentially one set of data and one set of variables all replicated by numerous vendors or available on the internet that can be used. This circumstance effectively eliminates the possibility of benefiting from independent replications of the research. Although always considered “poor” practice by statisticians and econometricians, datamining has become increasingly problematic for investors due to the improved availability of large sets of data that are easily accessible and easily analyzed. Nowadays, enormous amounts of quantitative data are available. Computers, spreadsheet and data subscriptions too numerous to list here are commonplace. Every conceivable combination of factors can be and likely has been tested and found to be spectacularly successful using in-sample empirical designs. However, the same strategies have no predictive power when implemented on an out-of-sample basis. Despite these very negative connotations, datamining is not only part of the deal in data driven investing, it requires a commitment to proper use of scientific and statistical methods.”
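The datamining problem described in the tutorial above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not code from the paper or the book: the "factors" are pure noise with a true mean return of zero, the 5% monthly volatility and 240-month samples are arbitrary choices, and the function names (`t_stats`, `replication_rate`, `simulate_data_mining`) are hypothetical. The replication rate here follows the definition given earlier: the share of factors with a significantly positive average return.

```python
import numpy as np


def t_stats(returns: np.ndarray) -> np.ndarray:
    """t-statistic of the mean return, one value per column (factor)."""
    n_obs = returns.shape[0]
    mean = returns.mean(axis=0)
    std_err = returns.std(axis=0, ddof=1) / np.sqrt(n_obs)
    return mean / std_err


def replication_rate(t: np.ndarray, threshold: float = 1.96) -> float:
    """Share of factors whose t-statistic exceeds the threshold."""
    return float(np.mean(t > threshold))


def simulate_data_mining(n_factors: int = 1000, n_months: int = 240, seed: int = 0) -> dict:
    """Test many noise factors in-sample, then re-test the winner out-of-sample."""
    rng = np.random.default_rng(seed)
    # Assumption: every factor has a true mean of zero, so any "significant"
    # in-sample result is a false discovery.
    returns = rng.normal(0.0, 0.05, size=(2 * n_months, n_factors))
    in_sample, out_of_sample = returns[:n_months], returns[n_months:]

    t_in = t_stats(in_sample)
    t_out = t_stats(out_of_sample)
    best = int(np.argmax(t_in))  # the factor a data miner would report

    return {
        "share_significant_in_sample": replication_rate(t_in),
        "best_t_in_sample": float(t_in[best]),
        "same_factor_t_out_of_sample": float(t_out[best]),
    }


if __name__ == "__main__":
    for key, value in simulate_data_mining().items():
        print(f"{key}: {value:.3f}")
```

Under these assumptions, roughly 2.5% of the noise factors should clear the one-sided 1.96 threshold by chance alone, and the best in-sample factor will look impressive even though it has no true premium. Its out-of-sample t-statistic is just another random draw, which is the "one-time wonder" the tutorial warns about.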

Why does it matter?

The implications of this study are widely applicable. First of all, it’s not a “zoo” out there where factors proliferate at will. In fact, for the majority of factors, the replication crisis can be examined and understood. Rather than being datamining artifacts, factors are complex and dynamic, and they reflect genuine economic insights. There is a caveat, however: the risk/return relationship that characterizes factors must be viewed as a cluster of economic themes, in both domestic and global markets. Researchers are keeping up the good work by embracing methodologies that improve reproducibility and confidence, like the Bayesian methods documented in this study. In any case, be reassured that the factors used in portfolio construction, return assessment, and investment strategies are valid. (Larry’s write-up on this paper is here, for another perspective.)
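To give a feel for how a Bayesian approach pools information across factors, here is a minimal sketch of textbook normal-normal shrinkage. It is not the hierarchical model estimated in the paper. The assumptions are that each factor's true alpha is drawn from a common normal prior, that each estimate is normally distributed around the true alpha with a known standard error, and that the prior is fitted by a simple plug-in method of moments and then treated as known. All function names and inputs are hypothetical.

```python
import math

import numpy as np


def fit_prior(alpha_hat, std_err):
    """Plug-in (method-of-moments) estimate of the prior mean and sd of true alphas."""
    alpha_hat = np.asarray(alpha_hat, dtype=float)
    std_err = np.asarray(std_err, dtype=float)
    prior_mean = float(alpha_hat.mean())
    # Cross-sectional variance of the estimates is approximately
    # prior variance + average sampling variance, so subtract the latter.
    prior_var = float(alpha_hat.var(ddof=1) - np.mean(std_err ** 2))
    return prior_mean, math.sqrt(max(prior_var, 1e-12))  # floor keeps sd positive


def posterior(alpha_hat, std_err, prior_mean, prior_sd):
    """Posterior mean and sd of each alpha under the normal-normal model."""
    alpha_hat = np.asarray(alpha_hat, dtype=float)
    std_err = np.asarray(std_err, dtype=float)
    # kappa is the weight placed on a factor's own estimate.
    kappa = prior_sd ** 2 / (prior_sd ** 2 + std_err ** 2)
    post_mean = kappa * alpha_hat + (1.0 - kappa) * prior_mean
    post_sd = np.sqrt(kappa) * std_err
    return post_mean, post_sd


def prob_positive(post_mean, post_sd):
    """Posterior probability that the true alpha is above zero."""
    z = np.asarray(post_mean, dtype=float) / np.asarray(post_sd, dtype=float)
    return 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))


if __name__ == "__main__":
    # Hypothetical monthly alpha estimates (in %) and their standard errors.
    alpha_hat = [0.50, 0.40, 0.60, 0.10, 0.35]
    std_err = [0.20, 0.20, 0.20, 0.20, 0.20]
    prior_mean, prior_sd = fit_prior(alpha_hat, std_err)
    post_mean, post_sd = posterior(alpha_hat, std_err, prior_mean, prior_sd)
    print(prob_positive(post_mean, post_sd))
```

In this toy model each estimate is pulled toward the cross-sectional mean, and the posterior standard deviation is always smaller than the factor's own standard error, because the other factors contribute information through the prior. One known limitation of the plug-in shortcut is that it ignores uncertainty in the fitted prior, so it overstates precision when only a few factors are available.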

The most important chart in the paper

The results are hypothetical results and are NOT an indicator of future results and do NOT represent returns that any investor actually attained. Indexes are unmanaged and do not reflect management or trading fees, and one cannot invest directly in an index.

Abstract

Several papers argue that financial economics faces a replication crisis because the majority of studies cannot be replicated or are the result of multiple testing of too many factors. We develop and estimate a Bayesian model of factor replication that leads to different conclusions. The majority of asset pricing factors (i) can be replicated; (ii) can be clustered into 13 themes, the majority of which are significant parts of the tangency portfolio; (iii) work out-of-sample in a new large data set covering 93 countries; and (iv) have evidence that is strengthened (not weakened) by the large number of observed factors.

About the Author: Tommi Johnsen, PhD

—

Important Disclosures

For informational and educational purposes only and should not be construed as specific investment, accounting, legal, or tax advice. Certain information is deemed to be reliable, but its accuracy and completeness cannot be guaranteed. Third party information may become outdated or otherwise superseded without notice. Neither the Securities and Exchange Commission (SEC) nor any other federal or state agency has approved, determined the accuracy, or confirmed the adequacy of this article.

The views and opinions expressed herein are those of the author and do not necessarily reflect the views of Alpha Architect, its affiliates or its employees. Our full disclosures are available here. Definitions of common statistics used in our analysis are available here (towards the bottom).
